One day, a postdoc on our team shared in our Slack group a tribute he had written with ChatGPT. It read: “Ode to Lord AI, may he reign for eternity.”

He jokingly remarked that if AI ever ruled the world, he’d recite this poem to pledge allegiance and save his own skin.

While he said it in jest, it highlighted a growing concern: many people genuinely fear being ruled by an all-seeing, “Skynet-like” AI.

Given the pervasive pessimism surrounding AI and machine learning, I’ve often tried to present their positive impacts in my writings.

I aim to discuss these technologies from a practical and optimistic perspective.

Yet even as we sing their praises, we must not grow complacent and ignore the potential pitfalls.

As Lao Tzu once said, “Misfortune is what fortune depends upon; fortune is where misfortune hides beneath.”

Therefore, this concluding piece of the series will delve into the good, the bad, and the gray areas of AI.

Only through comprehensive and rational discussion can we stay grounded when challenges arise.

AI’s multifaceted role in fundamental research: It’s not just about efficiency

Our current AI landscape offers plenty of reasons for optimism, especially as it addresses longstanding “bottleneck” issues across various domains.

In biotech, AI is accelerating our understanding of protein folding at a far lower cost, a pivotal step for drug discovery.

With AI’s assistance, we can quickly infer protein structures and properties from vast numbers of amino acid sequences without investing heavily in biochemical experiments.

This not only drastically reduces research costs but also significantly boosts efficiency.

Notably, AI’s current performance in this area far surpasses traditional methods based purely on human knowledge.

In the realm of physics, AI has catalyzed breakthroughs, especially in nuclear fusion research.

If you recall our discussion in “Black holes and souffles,” you’d remember that, theoretically, nuclear fusion isn’t an insurmountable challenge—the sun is a natural fusion reactor after all.

Yet, the primary challenge of replicating nuclear fusion on Earth lies in our struggle to control plasma.

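Now, with the aid of reinforcement learning, the same family of techniques that powered AlphaGo, researchers can predict and adjust the shape of the plasma in real time. These advances come as the field reaches historic milestones: in a separate, laser-driven experiment in 2022, a fusion reaction released more energy than the lasers delivered to it for the first time.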

Should nuclear fusion be successfully commercialized, it will emerge as an incredibly sustainable energy source.

Unlike nuclear fission, fusion is not only a cleaner form of energy but also carries no risk of a runaway meltdown: if conditions falter, the reaction simply stops.

Turning to my field of study, astronomy, AI harbors immense potential. You might have watched Netflix’s “Don’t Look Up” during the pandemic, which dramatizes the threat of a comet striking Earth.

Though the theme might seem clichéd, in real life spotting Earth-threatening asteroids amid heaps of survey data is genuinely challenging.

My colleagues and I are using computer vision techniques—akin to human-tracking in surveillance systems—to hunt these asteroids.

The double-edged sword of AI misuse

While machine learning has been propelling our society forward in many ways, not all of its effects are rosy.

Even though I’ve consistently emphasized the positive potential of AI, we must remain vigilant about the risks it brings.

In Malaysia, there’s a prevailing notion that machine learning is an elite and unreachable domain. It seems many feel it’s best to sit back and watch powerhouses like the U.S. and China duke it out in the AI arena.

In reality, however, the barrier to entry in machine learning is relatively low. While some research certainly requires significant funding, the basic concepts are comprehensible even to high school students.

Consider that Singapore is currently attracting computer science PhD candidates from around the world with lucrative scholarships. If Malaysia doesn’t start giving AI and machine learning the attention they deserve, we risk falling behind on the global stage.

Our youth need to recognize that mastering machine learning isn’t an insurmountable goal but an essential skill for the future.

Yet the very accessibility of machine learning also creates opportunities for those with malicious intent.

When we say AI is becoming more “intelligent,” we don’t mean that it is conscious, but that it can generalize from limited samples. Just as humans can recognize a person from a few words, AI systems increasingly generalize from minimal data.

Such advancements, while exciting, also carry societal risks, such as mimicking someone’s voice from a short audio clip. But as the saying goes, “for every move of the devil, there’s a countermove of the divine.” Society will eventually adapt.

Speaking of malefactors using AI for nefarious purposes, there are even graver concerns. I once discussed this with a colleague who uses AI for protein folding research. His primary concern is that this low-cost “drug-making” technology, while revolutionary for medicine, could also be used to design viruses at a minimal cost. The mere thought gave me the chills.

This brings me back to the beginning of the article. Sometimes, our discussions about AI can be sidetracked. While the prospects of massive unemployment or the rise of a “Skynet-like” sentient robot might be far-fetched, we must remember that humanity’s most significant threats often come from humans themselves.

Striving forward, balancing risks and opportunities

One might wonder, given all the uncertainties, why not just curtail the development of these technologies?

It’s a worthy question, one frequently debated in countries like the U.S.

The crux is that even if we restrict the development of large models, the fundamental issues remain. Smaller models, given the relatively low entry barrier, can be just as destructive.

On the flip side, we face a myriad of pressing and intricate problems, from combating climate change and finding sustainable energy sources to treating cancer, or even mitigating rare but potentially catastrophic threats like asteroid impacts.

In all these domains, machine learning represents our best shot. We can’t throw the baby out with the bathwater because of its potential risks.

Whether one believes we’re overreacting or being complacent, the fact is that we have already opened the floodgates.

The best we can do now is to deepen our understanding of these emerging technologies, form our opinions, and not just be swayed by popular sentiment.

The uncertain future can be daunting. Yet human progress has always been like walking a tightrope at dizzying heights, fraught with risk and uncertainty.

Stagnation is not an option. To maintain our balance, we must march forward with courage.

Read also:

- AI, ChatGPT, and my mom’s Roomba

- On AI and the soul-stirring char siu rice

- Redefining education in the AI era: the rise of the generalists

- AI in astronomy: Promise and the path forward

- Unpacking AI: Where opportunity meets responsibility

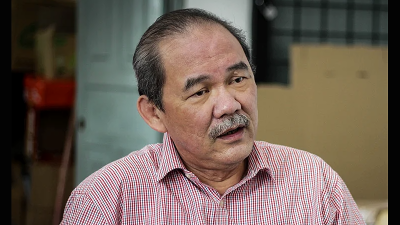

(Yuan-Sen Ting graduated from Chong Hwa Independent High School in Kuala Lumpur before earning his degree from Harvard University in 2017. He was subsequently awarded a Hubble Fellowship by NASA in 2019, which allowed him to pursue postdoctoral research at the Institute for Advanced Study in Princeton. He is currently an associate professor at the Australian National University, splitting his time between the School of Computing and the Research School of Astronomy and Astrophysics. His research focuses on using advanced machine learning techniques for statistical inference on large astronomical datasets.)
