SINGAPORE: The number of students caught plagiarizing and passing off content generated by artificial intelligence as their own work remains low, the public universities here said following a recent case at NTU.

But professors are watching closely for signs of misuse, warning that over-reliance on AI could undermine learning. Some are calling for more creative forms of assessment.

Their comments follow NTU’s decision to give the students involved zero marks for an assignment after finding that they had used generative AI tools in their work.

The move drew attention after one of the students posted about it on online forum Reddit, sparking debate about the growing role of AI in education and its impact on academic integrity.

All six universities here generally allow students to use gen AI to varying degrees, depending on the module or coursework.

To uphold academic integrity, students are required to declare when and how they use such tools.

In the past three years, Singapore Management University (SMU) recorded “less than a handful” of cases of AI-related academic misconduct, it said, without giving specific numbers.

Similarly, Singapore University of Technology and Design (SUTD) encountered a “handful of academic integrity cases, primarily involving plagiarism”, during the same time period.

At Singapore University of Social Sciences (SUSS), confirmed cases of academic dishonesty involving gen AI remain low, but the university has seen a “slight uptick” in such reports, partly due to heightened faculty vigilance and use of detection tools.

The other universities, NUS, Singapore Institute of Technology (SIT) and NTU, did not respond to queries about whether more students have been caught flouting the rules by using AI.

Recognizing that AI technologies are here to stay, universities said they are exploring better ways to integrate such tools meaningfully and critically into learning.

Gen AI refers to technologies that can produce human-like text, images or other content based on prompts.

Educational institutions worldwide have been grappling with how to balance its challenges and opportunities while maintaining academic integrity.

Faculty members here have flexibility to decide how AI can be used in their courses, as long as their decisions align with university-wide policies.

NUS allows AI use for take-home assignments if properly attributed, although instructors have to design complex tasks to prevent over-reliance.

For modules focused on core skills, assessments may be done in person or designed to go beyond AI’s capabilities.

At SMU, instructors inform students which AI tools are allowed, and guide them on their use, typically for idea generation or research-heavy projects outside exams.

SIT has reviewed assessments and trained staff to manage AI use, encouraging it in advanced courses like coding but restricting it in foundational ones, while SUTD has integrated gen AI into its design thinking curriculum to foster higher-order thinking.

The idea is to teach students when AI should be used as a tool, when it should be treated as a partner, and when it should be avoided.

Universities said that students must ensure originality and credibility in their work.

The allure of gen AI

Students interviewed by The Straits Times, who requested to remain anonymous, said AI usage is widespread among their peers.

“Unfortunately, I think that (using gen AI) is the norm nowadays. It has become so rare to see people think on their own first before sending their assignments into ChatGPT,” said a 21-year-old fourth-year law student from SUSS.

Still, most students said they have a sense of when it is appropriate to use AI and when it is not. Several said they use it mainly for brainstorming, collating research, and sometimes while writing.

A 20-year-old Year 4 economics student from NTU said he does not see AI as anything more than a “really smart study buddy” that helps him clarify difficult concepts, similar to how one would consult a professor.

A third-year SMU political science student, 22, said she uses AI to fix her grammar before submitting her essays, but draws the line at copying essays wholesale from ChatGPT.

But some students said they would turn to AI to quickly complete general modules outside their specializations that they feel are not worth their personal effort.

AI may improve efficiency, but there is a “level of wisdom that needs to come with that usage”, said a third-year public policy and global affairs student from NTU.

The 21-year-old said she would not use ChatGPT for tasks that require her personal opinion, but would use it “judiciously” to complete administrative matters.

Other students said they avoid relying too much on AI, as they take pride in their work.

A 23-year-old Year 3 computer science student from SUTD said he wants to remain “self-disciplined” in his use of AI because he realized he needed to learn from his mistakes in order to improve academically.

More creativity needed in testing

Academics say universities must bring AI use into the open and rethink assessments to stay ahead.

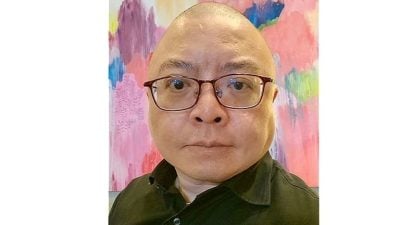

SMU Associate Professor of Marketing Education Seshan Ramaswami embraces AI tools, but with caveats.

In recent terms, he has encouraged students to use AI, provided they submit a full account of how tools were used and critique their outputs.

He also uses AI tools to create practice quizzes, and a chatbot that allows students to ask questions about his class materials. But he tells them not to “blindly trust” its responses.

The real danger lies in uncritical AI use, he added, which can weaken students’ judgment, clarity in writing or personal integrity.

Dr Ramaswami said he is “going to have to be even more thoughtful about the design of course assessments and pedagogy”.

He may explore methods like “hyper-local” assignments based on Singapore-specific contexts, oral examinations to test depth of understanding, and in-class discussions where devices are put away and ideas are exchanged in real time.

Even longstanding assessment formats like individual essays may need to be reconsidered, he said.

Dr Thijs Willems, a research fellow at the Lee Kuan Yew Centre for Innovative Cities at SUTD, said that while essays, presentations and prototypes still matter, these are no longer the sole markers of achievement.

More attention needs to be paid to the originality of ideas, the sophistication with which AI is prompted and questioned, and the human judgment used to reshape machine output into something unexpected, he said.

These qualities “surface most clearly in reflective journals, prompt logs, design diaries, spontaneous oral critiques, and peer feedback sessions”, he added.

SUSS Associate Professor Wang Yue, head of the Doctor of Business Administration Program, said undergraduates should already have basic cognitive skills and foundational knowledge.

“AI frees us to focus on higher-order thinking like developing insights and exercising wisdom,” she said, adding that restricting AI would be counterproductive to preparing students for the workplace.

Critical thinking needed more than ever

The same speed that makes AI exciting is also its potential hazard, said Dr Willems, warning that learners who treat it as a “one-click answer engine” risk accepting mediocre work and weakening their own understanding.

The key is to focus on the quality of human and AI interaction, he said. “Once learners adopt the stance of investigators of their own practice, their critical engagement with both technology and subject matter deepens.”

Dr Jean Liu, director of the Center for Evidence and Implementation and adjunct assistant professor at the NUS Yong Loo Lin School of Medicine, said that while AI offers major advantages for learning, universities must clearly define the line between acceptable use and academic dishonesty.

“AI can act as a tutor who provides personalized explanations and feedback… or function as an experienced mentor or thought partner for projects,” she said.

But the line is drawn when students allow AI to do the work wholesale.

“In an earlier generation, a student might pay a ghost writer to complete an essay,” Dr Liu said.

“Submitting a ChatGPT essay falls into the same category and should be banned.

“In general, it is best practice to come to an AI platform with ideas on the table, not to have AI do all the work. Helping students find this balance should be a key goal of educators.”

Universities must be upfront about what kinds of AI use are acceptable for students, and provide clearer guidance, she added.

Dr Jason Tan, associate professor for policy, curriculum and leadership at the National Institute of Education, said the rise of AI is testing students’ integrity and sense of responsibility.

Over-reliance on AI tools could also erode critical thinking, he added.

“Students have to decide for themselves what they want to get out of their university education,” he said.
