As a kid, before the internet was everywhere, I was pretty much “held hostage” every Lunar New Year by Channel TV2 to watch Stephen Chow’s “The God of Cookery.” The grand finale features a culinary showdown with the villain. The bad guy serves up a high-class, luxurious Buddha Jumps Over the Wall, while Chow counters with something deceptively simple yet deeply touching: Char Siu Rice, which he names “Soul-Stirring Rice.” Against all odds, it’s this dish that wins, reducing judges and audience members alike to tears.

Let’s use this Soul-Stirring Rice as a launching pad to discuss how AI is trained.

Limitations of traditional programming

Firstly, let’s consider traditional computer programming.

Here, the computer acts essentially as a puppet, executing precisely the explicit instructions that humans have written for it.

Take a point-of-sale system at a supermarket as an example: scan a box of Cheerios, and it charges $3; scan a Red Bull, it’s $2.50.

This robotic repetition of specific commands is probably the most familiar aspect of computers for many people.
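To make the supermarket example concrete, here is a minimal sketch of such a till. The price table and the `charge` function are made up for illustration; the point is only that the program knows nothing beyond what someone typed into it.

```python
# Hypothetical price table: every item the till knows is listed by hand.
PRICES = {
    "cheerios": 3.00,
    "red bull": 2.50,
}


def charge(item: str) -> float:
    """Return the price of a scanned item, exactly as programmed."""
    key = item.lower()
    if key not in PRICES:
        # Nobody wrote a rule for this item, so the program cannot guess.
        raise KeyError(f"no price programmed for {item!r}")
    return PRICES[key]


print(charge("Cheerios"))  # 3.0
print(charge("Red Bull"))  # 2.5
```

Scan anything that is not in the table, and the till simply gives up: there is no rule to follow.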

This is akin to rote learning from a textbook, start to finish.

But this programmed obedience has limitations—similar to how following a fixed recipe restricts culinary creativity.

Traditional programming struggles when the data is too complex or too vast for anyone to write a rule for every case.

A set recipe may create a delicious Beef Wellington, but it lacks the capacity to innovate or adapt.

Furthermore, not all data fits neatly into an “A corresponds to B” model.

Take YouTube videos: their underlying messages can’t easily be boiled down to a list of if-then rules.

This rigidity led to the advent of machine learning, the approach behind much of today’s AI, which discerns patterns in data without being explicitly programmed to do so.

Remarkably, the core tenets of machine learning are not entirely new.

Groundwork was being laid as far back as the mid-20th century by pioneers like Alan Turing.

Laksa — Penang + Ipoh

During my childhood, my mother saw the value in non-traditional learning methods.

She enrolled me in a memory training course that discouraged rote memorization.

Instead, the emphasis was on creating “mind maps” and making associative connections between different pieces of information.

Machine learning models operate on a similar principle. They generate their own sort of “mind maps,” condensing vast data landscapes into more easily navigated territories.

This allows them to form generalizations and adapt to new information.

For instance, if you type “King – Man + Woman” into ChatGPT, it will typically respond with “Queen.”

This suggests that the machine isn’t just memorizing words; it has captured the relationships between them.

In this case, it deconstructs “King” into something like “royalty + man.”

When you subtract “man” and add “woman,” the equation becomes “royalty + woman,” which matches “Queen.”
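The arithmetic can be sketched with a toy example. This is the classic word-vector illustration, not a description of what happens inside ChatGPT. The three-number “meanings” below (royalty, male, female) are invented by hand, whereas real models learn hundreds of such numbers from text.

```python
import math

# Made-up "meanings" as [royalty, male, female]. Illustration only.
WORDS = {
    "king":   [1.0, 1.0, 0.0],
    "queen":  [1.0, 0.0, 1.0],
    "man":    [0.0, 1.0, 0.0],
    "woman":  [0.0, 0.0, 1.0],
    "prince": [0.8, 1.0, 0.0],
}


def cosine(a, b):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def analogy(start, minus, plus):
    """Compute start - minus + plus, then return the closest other word."""
    target = [s - m + p
              for s, m, p in zip(WORDS[start], WORDS[minus], WORDS[plus])]
    # Skip the three input words, as is usual in this kind of demonstration.
    candidates = [w for w in WORDS if w not in (start, minus, plus)]
    return max(candidates, key=lambda w: cosine(target, WORDS[w]))


print(analogy("king", "man", "woman"))  # queen
```

Here “king” minus “man” plus “woman” lands exactly on the numbers for “queen,” which is why it wins over “prince.”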

For a more localized twist, try typing “Laksa – Penang + Ipoh” in ChatGPT. You’ll likely get “Hor Fun.” Isn’t that fun?

Knowledge graphs and cognitive processes

Machine learning fundamentally boils down to compressing a broad swath of world information into an internal architecture.

This enables machine learning to exhibit what we commonly recognize as “intelligence,” a mechanism strikingly similar to human cognition.
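A very small sketch of this “compression” idea, under simple assumptions: the 50 data points below are invented to roughly follow a straight line, and the model is an ordinary least-squares line fit rather than anything resembling a modern neural network. Fifty points are squeezed into just two numbers, which can then be used on an input the model has never seen.

```python
# Hypothetical data: 50 (x, y) points that roughly follow y = 2x + 1,
# with a small alternating wobble standing in for real-world noise.
xs = [float(i) for i in range(50)]
ys = [2.0 * x + 1.0 + (0.5 if i % 2 == 0 else -0.5)
      for i, x in enumerate(xs)]


def fit_line(xs, ys):
    """Least-squares fit: compress all the points into (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


slope, intercept = fit_line(xs, ys)


def predict(x):
    """Reconstruct a y value from the two stored numbers alone."""
    return slope * x + intercept


print(slope, intercept)  # close to 2 and 1
print(predict(100.0))    # an x far outside the data the line was fitted on
```

The fitted line never stores the original points. It keeps only the pattern, and that pattern is what lets it say something sensible about new inputs.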

This idea of internal compression and reconstruction is not unique to machines.

For example, a common misconception is that our eyes function like high-definition cameras, capturing every detail within their view.

The reality is quite different. Just as machine learning models process fragmented data, our brains take in fragmented visual input and then reconstruct it into a more complete picture based on pre-existing knowledge.

Our brain’s role in filling in these perceptual gaps also makes us susceptible to optical illusions.

You might see two people of identical height appear differently depending on their surroundings.

This phenomenon stems from our brain’s reliance on built-in rules to complete the picture, and manipulating these rules can produce distortions.

Speaking of rule-breaking, recall the 2016 Go match between AlphaGo and Lee Sedol.

Lee had lost the first three games when, in the fourth, he played a move that AlphaGo’s internal picture of the game hadn’t anticipated.

This led to several mistakes by the AI, allowing Lee to win that game.

Here too, the core concept of data reconstruction is at play.

Beyond chess: The revolution in deep learning

The creation and optimization of knowledge graphs have always been a cornerstone of machine learning.

However, for a long time, this area remained our blind spot.

In the realm of chess, before the advent of deep learning, we leaned heavily on human experience.

We developed chess algorithms based on what we thought were optimal rules, akin to following a fixed recipe for a complex dish like Beef Wellington.

We believed our method was fool-proof.

This belief was challenged by Rich Sutton, a luminary in machine learning, in his blog post “The Bitter Lesson.”

According to Sutton, our tendency to assume that we have the world all figured out is inherently flawed and short-sighted.

In contrast, recent advancements in machine learning, including AlphaGo Zero and ChatGPT, adopt a more flexible, “Char Siu Rice” approach.

They learn from raw data with minimal human oversight.

Sutton argues that, given the continued exponential growth in computing power described by Moore’s Law, this method of autonomous learning is the most sustainable path forward for AI development.

While the concept of computers “learning on their own” might unnerve some people, let’s demystify that notion.

Far from edging towards human-like self-awareness or sentience, these machines are engaging in advanced forms of data analysis and pattern recognition.

Machine learning models perform the complex dance of parsing, categorizing, and linking large sets of data, much as an expert chef intuitively knows how to meld flavors and techniques.

These principles are now entrenched in our daily lives.

When you search for something on Google or receive video recommendations on TikTok, it’s these very algorithms at work.

So, instead of indulging in unwarranted fears about the future of machine learning, let’s appreciate the advancements that bring both simplicity and complexity into our lives, much like a perfect bowl of Char Siu Rice.


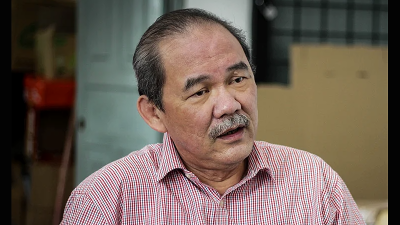

(Yuan-Sen Ting graduated from Chong Hwa Independent High School in Kuala Lumpur before earning his degree from Harvard University in 2017. Subsequently, he was honored with a Hubble Fellowship from NASA in 2019, allowing him to pursue postdoctoral research at the Institute for Advanced Study in Princeton. Currently, he serves as an associate professor at the Australian National University, splitting his time between the School of Computing and the Research School of Astrophysics and Astronomy. His primary focus is on utilizing advanced machine learning techniques for statistical inference in the realm of astronomical big data.)
